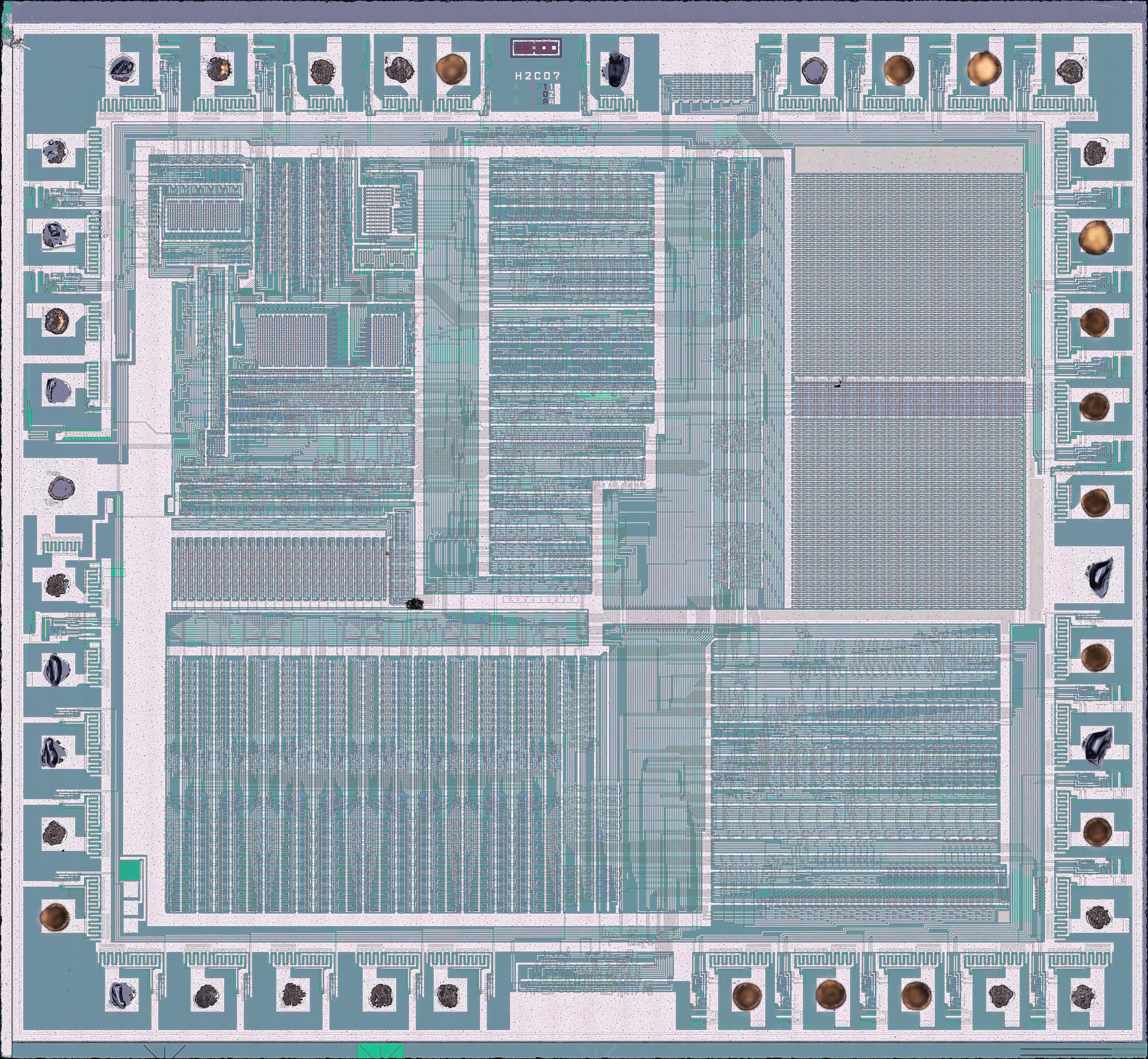

buherator

Yo all, it is Friday now where I am, so might as well get the #nakeddiefriday thing going.

Today's guest is the famous NES PPU chip, RP2C07A by Ricoh. What's interesting about this particular sample is that it's very very dead. Many thanks to @root42 for supplying it!

As always, a short thread follows. Why not give this one a boost while you're here? :D

SiPron page for those hi-res maps we all love: https://siliconpr0n.org/archive/doku.php?id=infosecdj:ricoh:rp2c07a

Note the die is oriented the same way Visual 2C02 has it: https://www.nesdev.org/wiki/Visual_2C02

Addison

addison@nothing-ever.works

New rant about fuzzing just dropped: https://addisoncrump.info/research/consideration-of-input-shapes/

Andrew Zonenberg

azonenberg@ioc.exchange

For all the marketing advice LinkedIn MBAs come up with, a surprising number of people forget one of the most basic steps: The Middle Schooler Test.

The process is simple:

1) Place a sample of your brochure, website, etc. in front of a recently calibrated 12-year-old boy.

2) If they start giggling uncontrollably, identify and correct the source of their amusement. Return to step 1.

3) If they aren't particularly amused, move on with the campaign.

David Chisnall (*Now with 50% more sarcasm!*)

david_chisnall@infosec.exchange

I keep reading ‘AI isn’t going away’, but I don’t think the people saying it have thought through the economics.

An LLM is, roughly speaking, two parts. One defines the structure of the model: the kinds of layers, their arrangement, and the connections between them. The other is the weights. The first part is quite similar to any other software artefact. Once you have a copy of it, it keeps working. This is the cheap bit to build, but the tricky bit to design.

The weights are the result of training. You need to throw a lot of data and a lot of compute at a system to create the weights. Once you have done this, you can use them indefinitely. The problem is that the weights include all of the data that is embedded into a model.

If you train a model today to use for programming, it will embed almost nothing about C++26, for example. If you train it on news, it will not be able to answer any questions about things that happened after today. Weights from today quickly become outdated.

This is one of the big costs for LLM vendors. Just as a snapshot of Google or Bing’s index rapidly decays in value and needs constantly updating, so do LLM weights.

Training these things costs a lot of money (DeepSeek claims only a few tens of millions, but it’s not clear the extent to which that was an accounting trick: how many millions did they spend training models that didn’t work?). For ‘AI’ companies, this cost is a feature. It’s a barrier to entry in the market. You need to have a load of data (almost all of which appears to have been used without consent) and a huge pile of very expensive GPUs to do the training. All of this is predicated on the idea that you can then sell access to the models and recoup the training costs (something that isn’t really working, because the inference costs are also high and no one is willing to pay even the break-even price for these things).

So if the companies building these things speculatively can’t make money, what happens? Eventually, they burn through the capital that they have available. Some weights are published (e.g. LLaMA), but those will become increasingly stale. Who do you expect to spend real money (and legal liability) training LLMs for no projected return?

If you’re a publicly traded company and are building anything around LLMs, you probably had a legal duty to disclose these risks to your shareholders.

hackaday

hackaday@hackaday.social

Rediscovering Microsoft’s Oddball Music Generator From The 1990s

https://hackaday.com/2025/08/14/rediscovering-microsofts-oddball-music-generator-from-the-1990s/

chiefpie

cplearns2h4ck@bird.makeup

Some of my bugs are patched in this month's patch tuesday, including the ones I used for Pwn2Own Berlin 2025.

CVE-2025-50167 Race UAF in Hyper-V

Taggart

mttaggart@infosec.exchange

Another day, another Cisco perfect 10.

This vulnerability is due to a lack of proper handling of user input during the authentication phase. An attacker could exploit this vulnerability by sending crafted input when entering credentials that will be authenticated at the configured RADIUS server.

I think @cR0w needs to start a perfect-10 leaderboard. Wagers accepted.

Matt Blaze

mattblaze@federate.social

Another bad user interface (MMS, which allows you to add any random phone number to a group chat) used by law enforcement, leading to inevitable mistakes.

https://infosec.exchange/@josephcox/115028172632972848

Until very recently (like 2 or 3 years ago), federal agents routinely misconfigured the encryption settings on their two-way radios, leading to sensitive traffic (much like that in the linked 404media article) going out in the clear more often than not.

buherator

buherator

https://blog.exploits.club/exploits-club-weekly-newsletter-82-synology-decryption-ai-thought-traces-fortiweb-auth-bypasses-and-more/

buherator

buherator

https://reverseengineering.stackexchange.com/q/29477/3814

Anyone dreamed up a solution to this?

#Ghidra

Sven Slootweg, low-spoons mode ("still kinky and horny anyway")

joepie91@slightly.tech

Everyone who is able to come back to #WHY2025, we are short-staffed on teardown volunteers, so *please* show up to help, either today (during daylight) or tomorrow. Given the shortage, even if this toot was a couple of hours ago by the time you read it, it will probably still be necessary, so please show up!

Gina Häußge

foosel@chaos.social

20 years in between these Phrack releases 😊 Got the small one at WTH2005 and the larger one at #why2025 😄

Adam Shostack

adamshostack@infosec.exchange

If someone wants to commit to buying the answer, locking it in a safe deposit box and throwing away the key, I'll throw $50 at the effort.

John Schwartz

jswatz_tx@threads.net

The plaintext of Kryptos, the mysterious statue at the heart of CIA headquarters, is up for sale to the highest bidder. Here's my story: https://www.nytimes.com/2025/08/14/science/kryptos-sculpture-cia-solution-auction.html?unlocked_article_code=1.eE8.m90H.Onsi2at1i2_U&smid=url-share

hackaday

hackaday@hackaday.social

How The Widget Revolutionized Canned Beer

https://hackaday.com/2025/08/14/how-the-widget-revolutionized-canned-beer/