404 Media

404mediaco@mastodon.social

An Oklahoma man tried to talk about a data center coming to his community. Police arrested him when he went a few seconds over his time limit.

https://www.404media.co/man-opposing-data-center-arrested-for-speaking-slightly-too-long/

buherator

buherator

Some days I'm so fucking tired of what IT has become...

Adfichter

adfichter@infosec.exchange

OpenAI's deal with the US Department of War:

"For intelligence activities, any handling of private information will comply with the Fourth Amendment, the National Security Act of 1947 and the Foreign Intelligence and Surveillance Act of 1978, Executive Order 12333, and applicable DoD directives requiring a defined foreign intelligence purpose."

Make sure you delete your ChatGPT Account today.

https://openai.com/index/our-agreement-with-the-department-of-war/

buherator

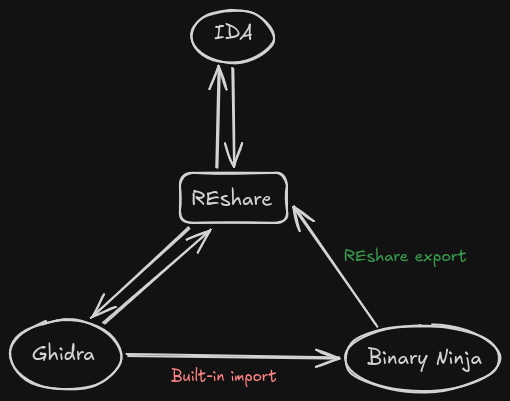

buherator

https://github.com/v-p-b/reshare-ninja

Since Binary Ninja supports importing Ghidra projects, technically there is now a conversion path between three major #ReverseEngineering frameworks, but of course I plan to create a REshare importer as well.

Note that there are certainly a *lot* of edge-cases that are not properly handled yet - issues and PRs are welcome as always!

B'ad Samurai 🐐🇺🇦

badsamurai@infosec.exchange

RE: https://chaos.social/@jonty/116153964612980511

It’s not just slop anymore. Beg bounty is a fraud vector.

cynicalsecurity

cynicalsecurity@bsd.network

A colleague and good friend of mine, with whom I have worked for 32 years (seriously), is looking for a job in the Netherlands, Belgium, Germany, or Switzerland. They are fluent in English, German, and Dutch, and speak French too! German nationality.

They have been managing complex IT projects for decades, are an excellent programmer, and are used to managing large development teams. They were doing "agile" when it was still called XP and pair programming (I used to make fun of them calling it 🍐 programming ;P … geek humour, sorry).

If you have anything I can send their way I'd be grateful. Please don't bother if you are ageist 'cos they're somewhat older than me and I am a greybeard (yes, they speak FORTRAN).

buherator

buherator

- spend more than 30s

- answer trick questions

- write essays

- give out my PII

Rik Viergever

rikviergever@mastodon.social

I'm looking for people working at a bank in Europe who are interested in #EthicalTech and in helping us ensure that banking apps function well on European operating systems. Do you know anyone who might be interested? Please reach out to me via DM!

#DigitalSovereignty #banking #FairTech #banks #finance #tech #buyEU #DMA #DSA #SocEnt #EUtech

#Revolut #bunq #Curve #WERO #IDEAL #ING #ABNAMRO #ASN #Triodos #Rabobank #UBS #HSBC #BNP #Paribas #N26 #Wise #Qonto #BNP #Paribas

vermaden

vermaden@bsd.cafe

MISSION: Save Myrient (https://myrient.erista.me/)

DEADLINE: 30 days.

HARDWARE:

- 2500 USD:

--- 1 x Supermicro SSG-6029P-E1CR24L [1]

- 10600 USD: (20 x 530 USD)

--- 20 x HDD 3.5 Seagate Exos 24TB

SOFTWARE:

- FreeBSD along with redundant ZFS (RAIDZ2 or DRAID) with ZSTD compression

TOTAL COST:

- 13500 USD

Maybe some company will come up with the needed budget.

I can do the FreeBSD/ZFS part for free.
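
As a rough sanity check of the figures in this post, the sketch below adds up the itemized hardware prices and estimates usable capacity. The prices and disk sizes come from the post; the pool layouts (one 20-wide RAIDZ2 vdev, or two 10-wide vdevs) and the helper names are assumptions for illustration, and the estimate ignores ZFS metadata overhead and any ZSTD compression gains. The itemized lines sum to 13100 USD, which is 400 USD below the stated total; the post does not say what the difference covers.

```python
# Figures below are taken from the post; the pool layouts are assumptions.
CHASSIS_USD = 2500        # 1 x Supermicro SSG-6029P-E1CR24L
DISK_COUNT = 20           # 20 x Seagate Exos 24TB
DISK_PRICE_USD = 530
DISK_SIZE_TB = 24
STATED_TOTAL_USD = 13500  # total quoted in the post


def itemized_cost() -> int:
    """Sum of the hardware lines that the post lists explicitly."""
    return CHASSIS_USD + DISK_COUNT * DISK_PRICE_USD


def raidz2_usable_tb(disks: int, size_tb: int, vdevs: int = 1) -> int:
    """Rough usable capacity for `vdevs` equally sized RAIDZ2 vdevs.

    Each RAIDZ2 vdev gives up two disks' worth of space to parity.
    Metadata, padding, and compression are deliberately ignored.
    """
    if vdevs < 1 or disks % vdevs != 0:
        raise ValueError("disks must divide evenly across vdevs")
    per_vdev = disks // vdevs
    if per_vdev < 3:
        raise ValueError("a RAIDZ2 vdev needs at least three disks")
    return vdevs * (per_vdev - 2) * size_tb


if __name__ == "__main__":
    cost = itemized_cost()
    print(f"itemized cost: {cost} USD")
    print(f"gap to stated total: {STATED_TOTAL_USD - cost} USD")
    print(f"raw capacity: {DISK_COUNT * DISK_SIZE_TB} TB")
    print(f"1 x 20-wide RAIDZ2: ~{raidz2_usable_tb(DISK_COUNT, DISK_SIZE_TB)} TB")
    print(f"2 x 10-wide RAIDZ2: ~{raidz2_usable_tb(DISK_COUNT, DISK_SIZE_TB, vdevs=2)} TB")
```

Under these assumptions, a single wide vdev yields about 432 TB and two narrower vdevs about 384 TB before overhead, so the layout choice trades capacity against resilience and rebuild time.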

buherator

buherator

"Careless big-time users are treating FOSS repos like content delivery networks"

https://www.theregister.com/2026/02/28/open_source_opinion/

Illustrative joke:

Little girl: Ice cream man, how much is an empty cone?

Ice cream man: Oh I'll give that to you for free :)

Little girl: Great, then I'll have 5000 empty cones!

Of course, LLMs are another example of this phenomenon.

buherator

buherator

Me after sleep: The code actually handles the special case, I just commented out the relevant part for some reason...

Also #ProTip: Always `git status` after getting back to your code after some time

If I use an LLM on a tiny bit of a 0day exploit, is that an AI-enabled cyber weapon?

Catalin Cimpanu

campuscodi@mastodon.social

Security firm Trail of Bits has released mquire, a Linux memory forensics tool that works without any external dependencies

https://blog.trailofbits.com/2026/02/25/mquire-linux-memory-forensics-without-external-dependencies/

buherator

buherator

https://www.mdsec.co.uk/2026/02/total-recall-retracing-your-steps-back-to-nt-authoritysystem/

cpresser

cccpresser@chaos.social

New #NameThatWare challenge. I repaired this today at work.

Please hide your deductions and guesses behind a CW to not spoil it for others. Googling is fair game.

Please don't just write a single word as an answer; instead, describe your observations and deductions so we can all learn about electronics.

If you are familiar with this kind of device, try to figure out the specific make and model instead of just saying something like 'Audio amplifier'.

Solution will be posted on Monday.

Harry Sintonen

harrysintonen@infosec.exchange

This should be obvious to everyone by now, but if you're not from the US you must assume that all your use of US AI services (#ChatGPT, #Claude, #Gemini etc) is fed directly to US intelligence services.

"We may share your Personal Data, including information about your interaction with our Services, with government authorities ... in compliance with the law (i)" (OpenAI)

"We may disclose personal data to governmental regulatory authorities as required by law" (Claude)

"We will share personal information outside of Google ... to: Respond to any applicable law, regulation, legal process, or enforceable governmental request" (Gemini)

The amount of valuable information fed to the systems voluntarily is staggering. It's not a matter of "if" it is happening, but "of course it is". It would be outright negligent if they weren’t capturing and disseminating it all.

https://en.wikipedia.org/wiki/Foreign_Intelligence_Surveillance_Act#Without_a_court_order

Jan Schaumann

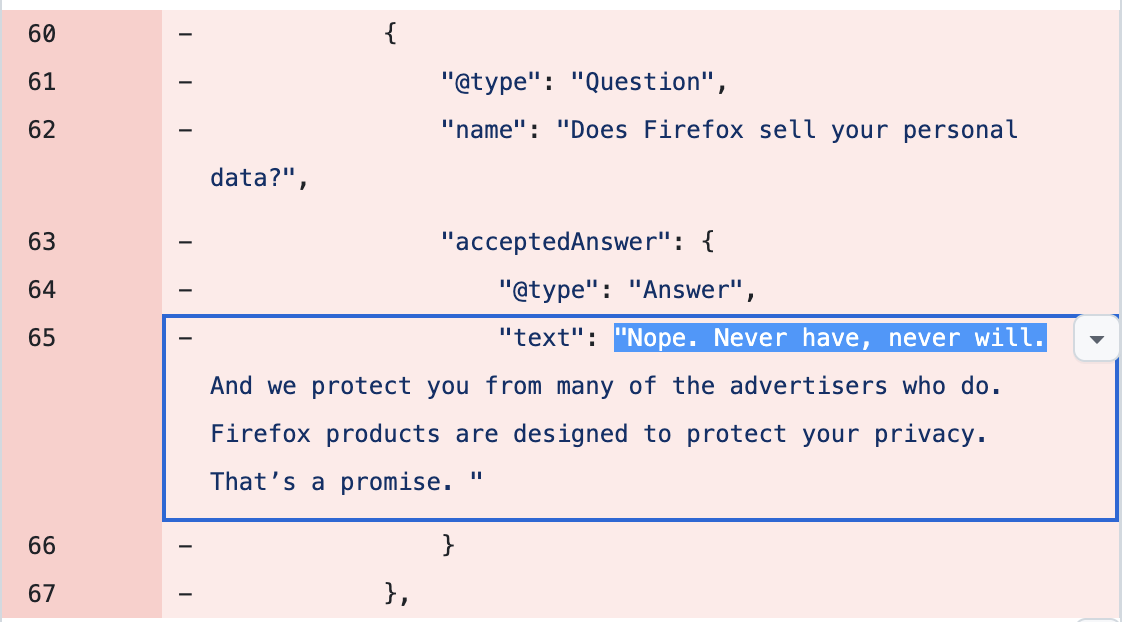

jschauma@mstdn.social

"Never have, never will." Promise, shmomise.

This is some bullshit, Mozilla.

And the explanation is bullshit, too, and sounds rather annoyed at having to explain to us silly users that *of course* you have to "share some data with our partners".