David Chisnall (*Now with 50% more sarcasm!*)

david_chisnall@infosec.exchange

I finally turned off GitHub Copilot yesterday. I’ve been using it for about a year on the ‘free for open-source maintainers’ tier. I was skeptical but didn’t want to dismiss it without a fair trial.

It has cost me more time than it has saved. It lets me type faster, which has been useful when writing tests where I’m testing a variety of permutations of an API to check error handling for all of the conditions.

I can recall three places where it has introduced bugs that took me more time to debug than the total time saving:

The first was something that initially impressed me. I pasted the prose description of how to communicate with an Ethernet MAC into a comment and then wrote some method prototypes. It autocompleted the bodies. All very plausible looking. Only it managed to flip a bit in the MDIO read and write register commands. MDIO is basically a multiplexing system. You have two device registers exposed, one sets the command (read or write a specific internal register) and the other is the value. It got the read and write the wrong way around, so when I thought I was writing a value, I was actually reading. When I thought I was reading, I was actually seeing the value in the last register I thought I had written. It took two of us over a day to debug this. The fix was simple, but the bug was in the middle of correct-looking code. If I’d manually transcribed the command from the data sheet, I would not have got this wrong because I’d have triple checked it.
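To show how small such a difference can be, here is a minimal sketch based on the IEEE 802.3 Clause 22 management frame, where read and write differ only in a two-bit opcode. This is not the MAC from the anecdote, whose command register may be laid out differently; the function and constant names are hypothetical and chosen for illustration.

```python
# Field values from the IEEE 802.3 Clause 22 management frame format.
ST_CLAUSE22 = 0b01  # start-of-frame pattern
OP_WRITE = 0b01     # opcode for a register write
OP_READ = 0b10      # opcode for a register read
TA_WRITE = 0b10     # turnaround bits driven by the host on a write


def mdio_header(op: int, phy_addr: int, reg_addr: int) -> int:
    """Pack ST, OP, PHYAD and REGAD into the 14 header bits of a frame."""
    if op not in (OP_READ, OP_WRITE):
        raise ValueError("op must be OP_READ or OP_WRITE")
    if not 0 <= phy_addr < 32 or not 0 <= reg_addr < 32:
        raise ValueError("PHY and register addresses are 5 bits each")
    return (ST_CLAUSE22 << 12) | (op << 10) | (phy_addr << 5) | reg_addr


def mdio_write_frame(phy_addr: int, reg_addr: int, value: int) -> int:
    """Build the 32-bit write frame (preamble omitted): header, TA, data."""
    if not 0 <= value <= 0xFFFF:
        raise ValueError("register values are 16 bits")
    header = mdio_header(OP_WRITE, phy_addr, reg_addr)
    return (header << 18) | (TA_WRITE << 16) | value
```

Naming the opcodes once and routing every frame through one checked helper means a swapped read and write shows up as a wrong constant in a single place, rather than as a magic number buried in otherwise plausible code.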

In another case, it had inverted the condition in an if statement inside an error-handling path. The error handling was a rare case and was asymmetric: hitting the if case when you wanted the else case was okay, but the converse was not. Lots of debugging. I learned from this to read the generated code more carefully, but that increased cognitive load and eliminated most of the benefit. Typing code is not the bottleneck, and if I have to think about what I want and then read carefully to check it really is what I want, I am slower.

Most recently, I was writing a simple binary search and insertion-deletion operations for a sorted array. I assumed that this was something that had hundreds of examples in the training data and so would be fine. It had all sorts of corner-case bugs. I eventually gave up fixing them and rewrote the code from scratch.
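For reference, a minimal sketch of the kind of code described here, not the code from the post. It assumes the array holds unique, comparable keys (set semantics), which is an assumption made for this example; the helper names are hypothetical. The corner cases that tend to go wrong are the empty array, keys below the first or above the last element, and keys that are absent.

```python
def lower_bound(items, key):
    """Return the first index i such that items[i] >= key (len(items) if none)."""
    lo, hi = 0, len(items)
    while lo < hi:
        mid = lo + (hi - lo) // 2
        if items[mid] < key:
            lo = mid + 1   # key, if present, is strictly to the right of mid
        else:
            hi = mid       # items[mid] >= key, so mid is still a candidate
    return lo


def contains(items, key):
    i = lower_bound(items, key)
    return i < len(items) and items[i] == key


def insert(items, key):
    """Insert key in sorted position; return False if it was already present."""
    i = lower_bound(items, key)
    if i < len(items) and items[i] == key:
        return False
    items.insert(i, key)
    return True


def remove(items, key):
    """Delete key if present; return whether anything was removed."""
    i = lower_bound(items, key)
    if i < len(items) and items[i] == key:
        del items[i]
        return True
    return False
```

Funnelling all three operations through one half-open `[lo, hi)` search keeps the boundary handling in a single place, so the bounds check `i < len(items)` is the only corner case each caller has to remember.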

Last week I did some work on a remote machine where I hadn’t set up Copilot and I felt much more productive. Autocomplete was either correct or not present, so I was spending more time thinking about what to write. I don’t entirely trust this kind of subjective judgement, but it was a data point. Around the same time I wrote some code without clangd set up and that really hurt. It turns out I really rely on AST-aware completion to explore APIs. I had to look up more things in the documentation. Copilot was never good for this because it would just bullshit APIs, so something showing up in autocomplete didn’t mean it was real. This would be improved by using a feedback system to require autocomplete outputs to type check, but then they would take much longer to create (probably at least a 10x increase in LLM compute time) and wouldn’t complete fragments, so I don’t see a good path to being able to do this without tight coupling to the LSP server and possibly not even then.
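As a rough illustration of the feedback idea, the sketch below discards suggested API names that do not resolve to anything real. It is only an existence check on dotted Python names, which is far weaker than type checking and nothing like coupling to an LSP server; `api_exists` and `filter_suggestions` are hypothetical names invented for this example.

```python
import importlib


def api_exists(dotted_name: str) -> bool:
    """Return True if a name such as 'math.sqrt' resolves to a real attribute."""
    module_name, _, attr_path = dotted_name.partition(".")
    try:
        obj = importlib.import_module(module_name)
    except (ImportError, ValueError):
        # Unknown module, or an empty module name.
        return False
    for part in attr_path.split(".") if attr_path else []:
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


def filter_suggestions(candidates):
    """Keep only the suggestions that name something that actually exists."""
    return [name for name in candidates if api_exists(name)]
```

Even this cheap filter needs a complete name to look up, which hints at why checking partial fragments is the harder part of the problem.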

Yesterday I was writing bits of the CHERIoT Programmers’ Guide and it kept autocompleting text in a different writing style, some of which was obviously plagiarised (when I’m describing precisely how to implement a specific, and not very common, lock type with a futex and the autocomplete is a paragraph of text with a lot of detail, I’m confident you don’t have more than one or two examples of that in the training set). It was distracting and annoying. I wrote much faster after turning it off.

So, after giving it a fair try, I have concluded that it is both a net decrease in productivity and probably an increase in legal liability.

Discussions I am not interested in having:

- You are holding it wrong. Using Copilot with this magic config setting / prompt tweak makes it better. At its absolute best, it was a small productivity increase; if it needs more effort to use, that will be offset.

- This other LLM is much better. I don’t care. The costs of the bullshitting far outweighed the benefits when it worked. To be better it would have to not bullshit, and that’s not something LLMs can do.

- It’s great for boilerplate! No. APIs that require every user to write the same code are broken. Fix them, don’t fill the world with more code using them that will need fixing when the APIs change.

- Don’t use LLMs for autocomplete, use them for dialogues about the code. Tried that. It’s worse than a rubber duck, which at least knows to stay silent when it doesn’t know what it’s talking about.

The one place Copilot was vaguely useful was hinting at missing abstractions (if it can autocomplete big chunks then my APIs required too much boilerplate and needed better abstractions). The place I thought it might be useful was spotting inconsistent API names and parameter orders but it was actually very bad at this (presumably because of the way it tokenises identifiers?). With a load of examples with consistent names, it would suggest things that didn't match the convention. After using three APIs that all passed the same parameters in the same order, it would suggest flipping the order for the fourth.

clearbluejar

clearbluejar@infosec.exchange

Exciting! My talk recording just dropped from #OBTS v7! 🗣️✨ Learn how to patch diff on Apple with #Ghidra, #ghidriff, and #ipsw: "Patch Different on *OS": https://www.youtube.com/watch?v=Ellb76t7nrc

Alexander Popov

a13xp0p0v@infosec.exchange

Slides for my talk at H2HC 2024:

🤿 Diving into Linux kernel security 🤿

I described how to learn this complex area and knowingly configure the security parameters of your Linux-based system.

And I showed my open-source tools for that purpose!

https://a13xp0p0v.github.io/img/Alexander_Popov-H2HC-2024.pdf

buherator

buherator

https://github.com/silentsignal/oracle_forms/

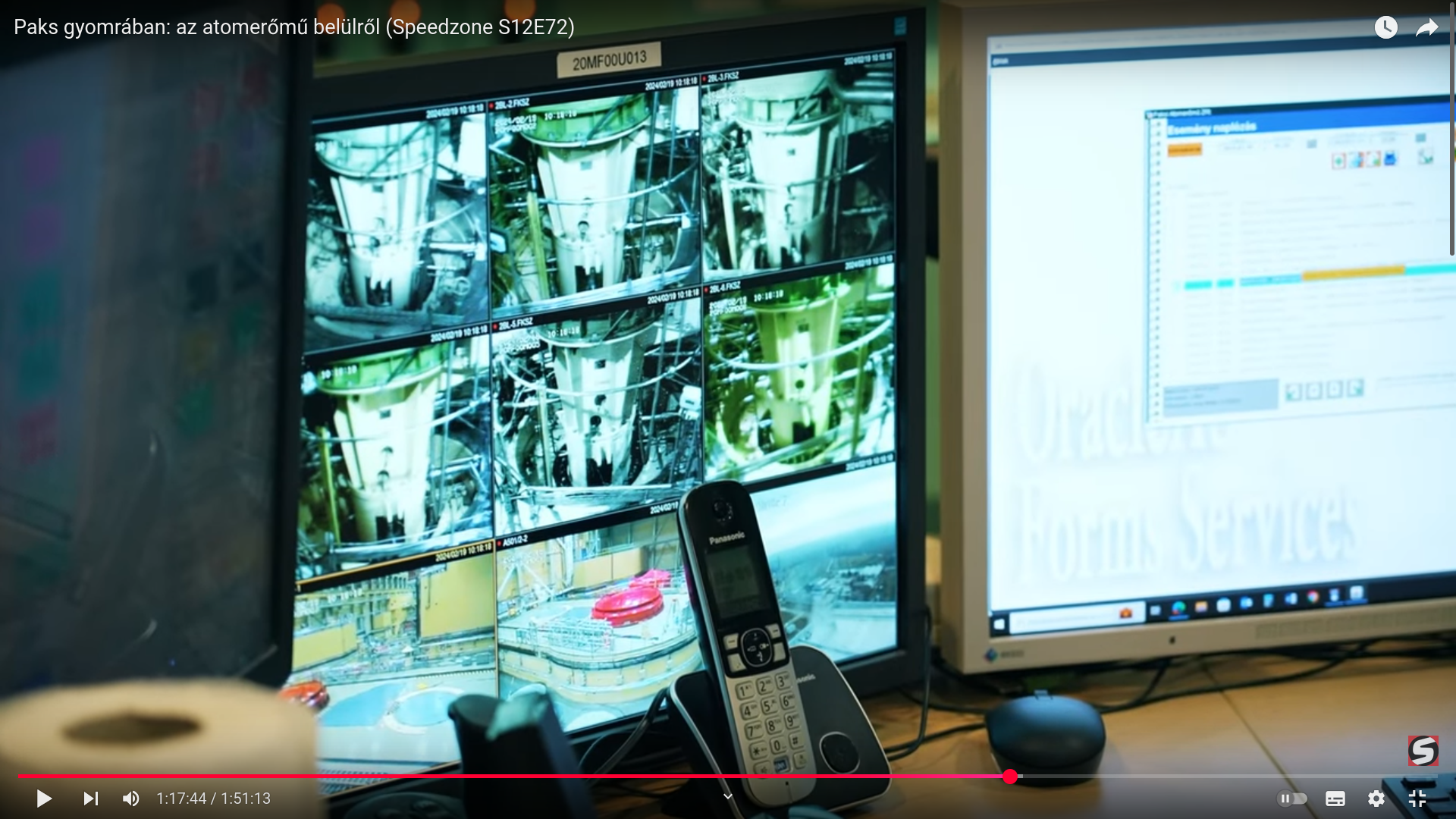

I've always thought Forms is a niche in enterprise IT that's slowly dying out (for good), until I saw this video about our local nuclear power plant o.O

https://youtu.be/xsOAjgFLImg?si=_FJsd7EoEC1J3gim&t=4660

Charlie Stross

cstross@wandering.shop

WIRED article forecasting the generative AI bubble will burst in 2025. This is more optimistic than my own expectations, but if WIRED are printing it, it's the direction sentiment in Silicon Valley is running in.

(Hint: there's gold in AI, but it's in *analytical* AI, aka big data, not stochastic parrot bullshit.)

https://www.wired.com/story/generative-ai-will-need-to-prove-its-usefulness/

buherator

buherator

Lukasz Olejnik

LukaszOlejnik@mastodon.social

My strategic privacy analysis. Is Google undoing a decade of progress on privacy? Their new policy allows invasive device fingerprinting for tracking user activity. Here’s my deep dive into what this means for privacy, and the future of AI. https://blog.lukaszolejnik.com/biggest-privacy-erosion-in-10-years-on-googles-policy-change-towards-fingerprinting/

GEBIRGE

GEBIRGE@infosec.exchange

My first article for @mogwailabs_gmbh just released. Thanks to @h0ng10 for making it happen. 🥳

Taggart

mttaggart@infosec.exchange

Seems like a mitigation for a Tomcat TOCTOU vuln was incomplete.

(H/t) @AAKL

Taggart

mttaggart@infosec.exchange

Does Tidal compensate artists fairly? I'm ready to ditch Spotify, but I'd like to do it the right way.

screaminggoat

screaminggoat@infosec.exchange

Sophos security advisory 19 December 2024: Resolved Multiple Vulnerabilities in Sophos Firewall (CVE-2024-12727, CVE-2024-12728, CVE-2024-12729)

- CVE-2024-12727 (9.8 critical) pre-auth SQL injection vulnerability in the email protection feature of Sophos Firewall

- CVE-2024-12728 (9.8 critical) weak credentials vulnerability potentially allows privileged system access via SSH to Sophos Firewall

- CVE-2024-12729 (8.8 high) post-auth code injection vulnerability in the User Portal allows authenticated users to execute code remotely in Sophos Firewall

Sophos has not observed these vulnerabilities to be exploited at this time.

#sophos #firewall #vulnerability #cve #infosec #cybersecurity

buherator

buherator

https://www.ibm.com/support/pages/node/7179509

Ars Technica

arstechnica@mastodon.social

Why AI language models choke on too much text

Compute costs scale with the square of the input size. That's not great.
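A quick worked example of what quadratic scaling implies, taking the statement above at face value. This models only the squared term and ignores any costs that grow linearly with input size; the function name is hypothetical.

```python
def relative_cost(tokens: int, baseline_tokens: int) -> float:
    """Cost relative to a baseline input, assuming cost grows as size squared."""
    if tokens <= 0 or baseline_tokens <= 0:
        raise ValueError("token counts must be positive")
    return (tokens / baseline_tokens) ** 2
```

Under that assumption, doubling the input quadruples the cost, and a tenfold longer input costs a hundred times as much.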

https://arstechnica.com/ai/2024/12/why-ai-language-models-choke-on-too-much-text/?utm_brand=arstechnica&utm_social-type=owned&utm_source=mastodon&utm_medium=social

Aral Balkan

aral@mastodon.ar.al

Heads up: Folks on #Codeberg

You might get an email belittling your project, seemingly from Michael Bell (mikedesu) via noreply@codeberg.org (an issue is created on your repo and then deleted, leading to the notification).

This appears to be part of a smear campaign someone is running that started on GitHub. e.g., see:

CC: @Codeberg – hope you can identify the account(s) responsible and block them. Example (deleted) issue: https://codeberg.org/kitten/app/issues/216

(🇨🇦 again 20 March)