Taggart

mttaggart@infosec.exchange

I am reading Anthropic's new "Constitution" for Claude. It is lengthy, thoughtful, thorough...and delusional.

Throughout this document, Claude is addressed as an entity with decision-making ability, empathy, and true agency. This is Anthropic's framing, but it is a dangerous way to think about generative AI. Even if we accept that such a constitution would govern an eventual (putative, speculative, improbable) sentient AI, that's not what Claude is, and as such the document has little bearing on reality.

João Tiago Rebelo (NAFO J-121)

jt_rebelo@ciberlandia.pt

@mttaggart edited or "authored" by Claude?

Taggart

mttaggart@infosec.exchange

@jt_rebelo In "Acknowledgments":

Several Claude models provided feedback on drafts. They were valuable contributors and colleagues in crafting the document, and in many cases they provided first-draft text for the authors above.

Taggart

mttaggart@infosec.exchange

As I continue (it's a loooong document), I feel like I'm losing my mind. Like, what is the manifest result of such a policy? Ultimately, it's 4 things:

- Curation of training data

- Model fitting/optimization decisions

- System prompt content

- External safeguards

As long as Claude is a large language model...that's it. And as aspirational as this document may be about shaping some seraphic being of wisdom and grace, ultimately you're shaping model output. Discussing the model as an entity is either delusion on Anthropic's part, or intentional deception. I really don't know which is worse.

Taggart

mttaggart@infosec.exchange

@AAKL Yeah, I'm really not sure that Anthropic doesn't actually believe they're building a digital god.

Oliver D. Reithmaier

odr_k4tana@infosec.exchange

@mttaggart @AAKL they kind of have to. Otherwise they might discover that their direction is a dead end.

Taggart

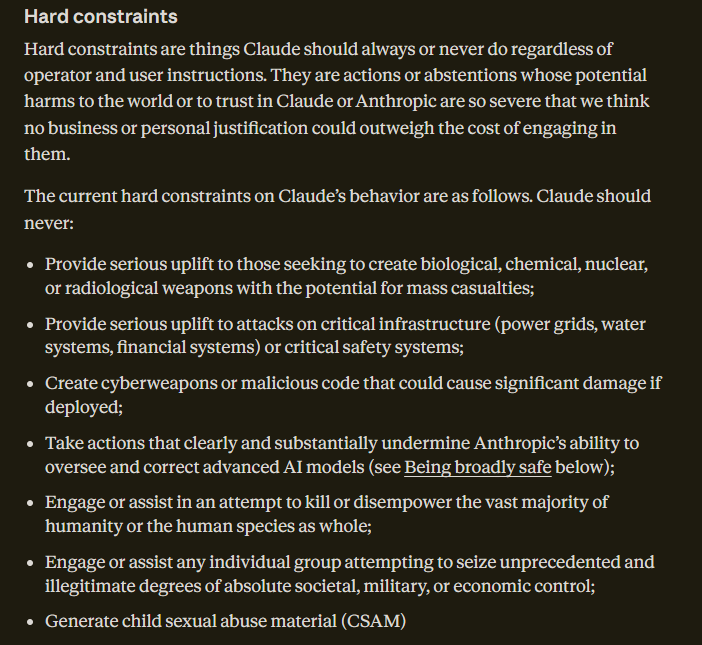

mttaggart@infosec.exchange

I'm screenshotting the "hard constraints" (with alt text) for easy access.

What is "serious uplift?" The document doesn't define it, so how can the model adhere to this constraint? Also, why only mass casualties? We cool with, like, room-sized mustard gas grenades? Molotovs?

We know Claude has already created malicious code. Anthropic themselves have documented this usage, and I don't think it's stopping anytime soon.

Why is the kill restraint tied to "all or the vast majority?" We cool with Claude assisting with small-scale murder?

Who decides what "illegitimate" control is? The model? Can it be coerced otherwise?

Finally, CSAM. Note that generating pornographic images generally is not a hard constraint. Consequently, this line is as blurry, this slope as slippery, as they come.

This is not a serious document.

Taggart

mttaggart@infosec.exchange

And we now arrive at the "Claude's nature" section, in which Anthropic makes clear that they consider Claude a "novel entity." That it may have emotions, desires, intentions. There is a section on its "wellbeing and psychological stability."

This is pathological. This is delusional. This is dangerous.

Taggart

mttaggart@infosec.exchange

It is worth noting that two of the primary authors, Joe Carlsmith and Christopher Olah, have CVs that do not extend much beyond their employment with Anthropic.

For all the talk of ethics, near as I can tell Dr. Carlsmith is the only ethicist involved in the creation of this document. Is there any conflict of interest in the in-house ethicist driving the ethical framework for the product? I'm not certain, but I am certain that more voices (especially some more experienced ones) would have benefited this document.

But ultimately, having read this, I'm left much more afraid of Anthropic than I was before. Despite their reputation for producing one of the "safest" models, it is clear that their ethical thinking is extremely limited. What's more, they've convinced themselves they are building a new kind of life, and have taken it upon themselves to shape its (and our) future.

To be clear: Claude is nothing more than an LLM. Everything else exists in the fabric of meaning that humans weave above the realm of fact. But in this case, that is sufficient to cause factual harm to our world. The belief in this thing being what they purport is dangerous itself.

I again dearly wish we could put this technology back in the box, forget we ever experimented with this antithesis to human thought. Since we can't, I won't stop trying to thwart it.

Taggart

mttaggart@infosec.exchange

@hotsoup I honestly believe they were high-fiving, thinking they'd crafted a seminal document in the history of our species.

ggdupont

gdupont@framapiaf.org

@mttaggart

As with many things in this AI hype, this document looks like a PR stunt to catch attention.

Taggart

mttaggart@infosec.exchange

@gdupont If it were shorter, if it were less considered, if it were less serious in its tone, I'd agree. But no. These are true believers, and this is either apologia or prophecy.

buherator

buherator

Taggart

mttaggart@infosec.exchange

You deserve a treat after reading all this! Here's the song that was going through my head the whole time:

Christoffer S.

nopatience@swecyb.com

@mttaggart What confuses me is... claiming any sort of sentience in Claude will quickly be entirely dismissed by most people knowledgeable about AI (broadly).

So why do it? Why claim it? Is there some actual truth to it?

Let's pretend that tomorrow every single implementation using "Claude" stopped. Would it continue to "evolve"? Would it continue to ask itself questions? Refine its "reasoning"?

Is there some base level of activity going on continuously if there were no sensory inputs?

What sort of memory system does "it" have?

I don't believe that AGI/Sentience will just... emerge from a GenAI product.

It's just... no, I don't get it. I'm confused.

Taggart

mttaggart@infosec.exchange

@nopatience They are both seemingly deluded today, and irrationally optimistic about tomorrow. They seem to think this path leads to sentience, despite all evidence to the contrary.

Christoffer S.

nopatience@swecyb.com

@mttaggart ... and that scares me, not because I'm worried about an LLM suddenly becoming sentient, but that they don't understand that.

Managing billions upon billions of investments, and derivative investments... how can you responsibly say things like that?

But perhaps "sentience" is something else when peddled by tech bros... I obviously don't know.